At 31C3 this year, Eric Filiol and Paul Irolla from the Laboratoire de Cryptologie et Virologie Opérationnelles gave a talk on the (in)security of mobile banking apps. While I appreciate the effort to draw more attention to the insecurity of mobile applications in general, I am afraid that the talk itself was based on quite a few misconceptions. It thus gave a misleading impression of how app development actually works and of why the code we see is as insecure as it is. Unfortunately, these misconceptions were readily amplified by the mass media (Die Zeit, for instance), which is why I think someone with more experience in the field should clarify a few things in this respect.

Vulnerabilities shown were not related to banking

First of all, Filiol and Irolla did not show a single banking-related vulnerability. What they showed was a series of potential vulnerabilities, none of which they demonstrated to be exploitable. Both authors also mentioned that it was hard to reach banking staff who could fix the problems they found. Well, this is not surprising, and the combination of the two is dangerous.

It’s not the banks who develop banking apps

So why is it not surprising? Well, guess what: it’s not the banks who actually develop banking apps. Banks are in the business of investing and making money, not in the business of software development. As a result, 99% of them outsource the development of such apps completely. While it makes sense to approach the banks directly when a security flaw is found, it’s not surprising that no one there is able to solve the problem at hand. This problem is then amplified when researchers such as Filiol and Irolla go public with alleged (!) insecurities that they have not actually shown to be exploitable. When talking to banks (or to any company, for that matter) about the insecurity of their software, it really helps to have convincing arguments. And even then, responsible disclosure is preferable to a rushed talk at 31C3.

It’s not primarily the banks who make the mistakes

The second major problem with the talk was that all the alleged vulnerabilities presented were not in the apps’ own code but in third-party code, and were arguably not even vulnerabilities. The authors complained about “extra functionality” that was present in the apps and that seemed dangerous and not privacy-preserving. They showed instances of the RootTools library being used, and instances of geolocation services by Yandex being used (in an app by Sberbank). For the use of RootTools, however, the authors themselves presented some good reasons, and Sberbank is a Russian bank while Yandex is a Russian company that provides geolocation services. So it should come as little surprise that Yandex code is present in such an app. There is also good reason for most banking apps to use geolocation information: many of them can show you the branch closest to your current location.

So the problem is not really that banks are somehow evil and include malicious code in their apps; it is simply that the companies who develop apps for banks use suboptimal means of implementing those apps’ functionality. I agree that this is bad, but it nevertheless paints a picture very different from, and much more muted than, the one presented in the talk.

Ok, but what can we do about it?

To conclude, the primary problem with the apps that Filiol and Irolla presented is not that banks are somehow incapable of developing secure apps or even have evil hidden plans. The main problem is one of outsourcing and poor tools for quality control. This problem is seen throughout the software development industry; it is specific neither to banking nor to mobile apps. To counter it, the following is required:

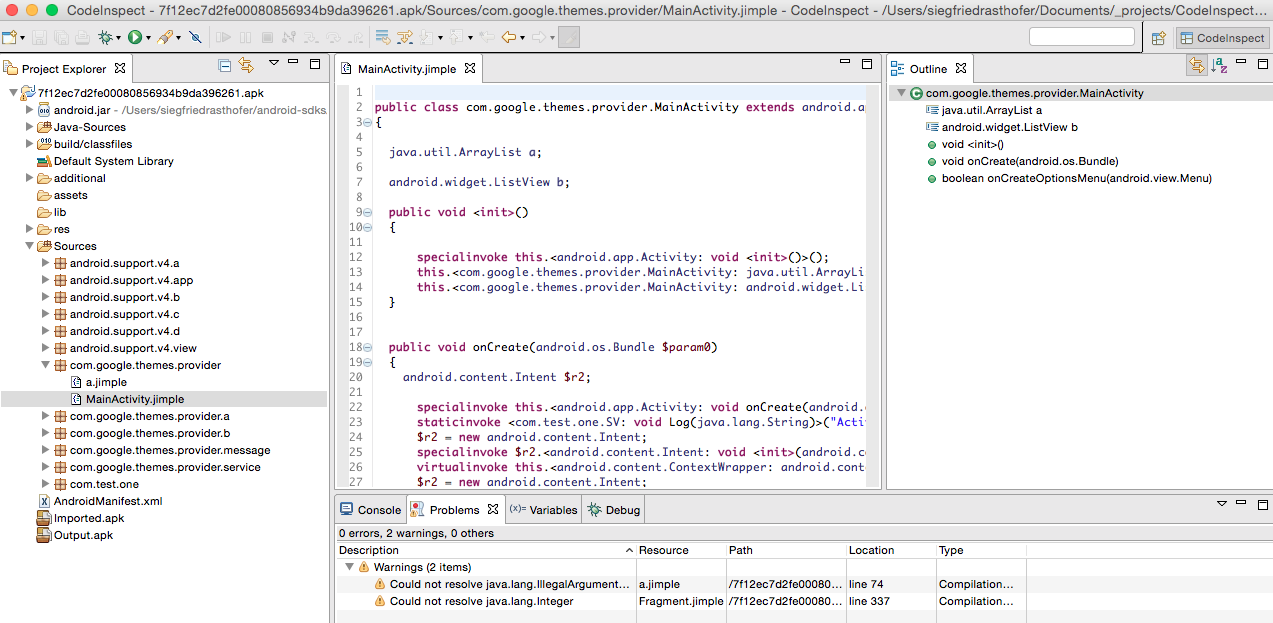

- Companies outsourcing development need to be given better tools to assess the security of the code they buy.

- Third-party security experts should be contracted to assess the security of such applications. Tools like CodeInspect can aid such experts.

- Likewise, app developers should be more aware of what third-party code they actually include in their applications, and of what its security implications are.

- Also, it should be possible to hold app developers liable for code they develop for third parties, in particular when it comes to security issues.

So, as much as I myself would like to blame all of this on the banks, I don’t think that would be a fair assessment. If anyone is to blame, it is the software development industry (for following bad practices), politicians (for not promoting better standards), and researchers (for bragging about alleged vulnerabilities rather than spending their time showing constructive ways to counter them). Then there is the press: while writing about vulnerabilities is considered sexy, writing about good security practices seems much less so. So the press, too, is setting the wrong incentives!

Cross-posted from SEEBlog